|

| "Whatever you say, Slick, but I need to tell you something about all your skillz..." |

While I'm very happy with / grateful for my computer science degree, I eventually became a software engineer. And the thing about software engineering is that it's related to, but quite distinct from, computer science. Following up on my

previous post about that topic, here's the topics and skills I wasn't taught in my computer science undergrad that proved important in my career so far. Along with each one, I'll try and provide the closest thing I remember from undergrad.

Reading other people's code

Almost no professional software engineering is done alone, which means reading other people's code is basically a necessity. And other people's code can be atrocious. While I learned to write well enough to be understood, the skill of fitting my mind around someone else's mental model to understand their code was rarely exercised.

Incidentally,

one study has suggested that aptitude to learning other programming languages is related to aptitude in learning natural languages significantly more than aptitude in the mathematics that makes up the core of computer science.

Closest I got: We did some debugging exercises which touched on this space, but the biggest match was becoming a teaching assistant (TA) for one of our courses involving a functional programming language gave me a lot of practical experience in understanding other people's code. I recommend it highly.

Choosing what problems to solve

It turns out, there are infinite problems to be solved with computers, and succeeding in a software engineering career involves a certain "nose" for which ones are the most valuable. Growing this skill can involve both introspection and retrospection, and is generally not a challenge that comes with undergrad, where problems will be handed to you until you complete coursework. Any software engineering career track will eventually expect you, the engineer, to generate the problems that should be solved; those are the engineers that a company needs, because they underwrite the bottom line for the next five years.

Closest I got: I undertook an independent study, but unfortunately it put me off of grad school and PhD pursuit. The gaping maw of the unknown was too much to stare at. But over time, I've been able to learn this skill better on-the-job.

Deciding on a solution, designing it, and defending it

With coursework, there is generally one metric by which your solution is evaluated: passing grade. But engineered software will be maintained by other people and often interlocks with a huge edifice of already-existing technology operating closer to or further away from the end-user. The right way to solve these problems is your responsibility to create, and your design will often require explanation or even defense of its fit vs. alternatives. These aren't software skills; they're people skills and they involve understanding not just the system you're building but the people interested in seeing it built, as well as time and cost tradeoffs.

Closest I got: As a TA, I had an opportunity to design new lessons and put them in front of the professor. This was an excellent opportunity to refine my ability to explain and defend something novel I'd created.

Huge systems

Corporate software accretes features. Once something is built, it is incredibly rare for the company to decide to stop doing whatever the thing is that the software supports.

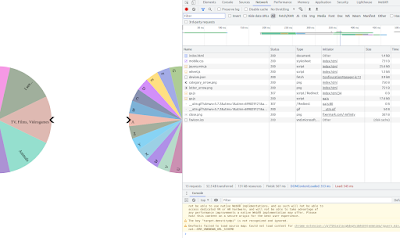

As a result, becoming a member of a large team at a mature company will often mean struggling with software of absolutely enormous complexity. This isn't useful to do to undergrads; pedagogy is better served by approaching the elephant one piece at a time, and any support systems needed for a class tend to be slim and focused. But big-world software (especially web software) is not designed to be understood by one person; that's why companies hire teams, and it's why reading other people's code becomes so important. A huge system requires some limited expertise in a broad skillset, because eventually an abstraction breaks and, for example, you have to care about the network layer to fix the UI, or the 3D rendering layer to build the schema for a new dataset, or the package management layer to write any code at all. And industry software often has to make practical tradeoffs that more abstract, academic software can skip... Does your system have a time limit in which it can operate, because you're building banking software and your peers turn off on weekends? Is your website breaking because you've hit the limit for max URL size (which is implementation-dependent)? Are you building on a system that lacks a floating-point coprocessor (or, for that matter, a "divide" operator)?

Closest I got: Reading the source code and design notes of big systems. The Linux kernel is open-source. So is Docker. So are multiple implementations of MapReduce. Reading and really trying to understand all parts of one of those elephants can give a person perspective on how wide the problem space can be.

Handling user feedback and debugging user bug reports

Real-world systems have real-world users, and users are the greatest way of finding faults that have ever been devised. Software of sufficient quality to pass academic exercises is often not nearly well-tested enough to be easily maintained or improved in the future, or grow to handle new requirements; it's write-and-forget software. Related to this: academic projects that require code often provide relatively straightforward feedback on problems. User feedback is anything but straightforward (when it isn't outright abusive, because users are emotionally angry that your tool got them 99% of the way to where they wanted to be and then ate their data).

Closest I got: While I actually had a few classes that taught expecting the unexpected, nothing was as educational as building real software that real users use. Whether it's maintaining a system relied upon by other students or building a library used by people on GitHub, getting early experience with the bug-debug loop with strangers in that loop is useful.

Instrumentation

More challenging for a software engineer than vague or angry bug reports is the bug report that never comes, where the user just gets frustrated and stops using your software. Software operating at huge scale can't rely on users to have the time or patience to file reports and must police itself. The practice of instrumenting systems (encompassing both robust error capture and reporting and observation of users' interactions with the tools, what pieces they care about vs. what goes unused, whether they often get halfway through a designed flow and stop, etc.) is as much art as science, but it demands its own attention to design and implementation.

Closest I got: This is a big piece that was basically never touched on in undergrad. Debugging exercises were close in that they could involve temporary instrumentation to understand code flow, but this is a skill that I think may be under-served (unless pedagogy has changed in fifteen-odd years).

Automating toil and transforming big blobs of text

While code isn't text, much code is represented as text and mechanically, a software engineer spends a lot of time moving text around. A significant difference between a stagnant software engineer and a growing one is whether they get better at that process. That can involve both practice and recognizing when a particular task is a "hotspot" that is repetitive drudgery enough that it could itself be automated. There's no rule against writing code to write code; half of software is probably exactly that. I like to rely on tools that make this kind of growth easier, and I'll touch on that in a

later blog post.

Closest I got: Computer science exercises tend to try and avoid being the sort of thing that can be solved with moving big blobs of text, trying to instead focus on problems solvable with the right (relatively) few lines in the right place. One exception is an operating system design course I took, and that one gave the most incentive to learn how to quickly replace phrases globally, or move an entire data structure from one form to another (when we the developers didn't expect that change so it wasn't cleanly structured to do it).

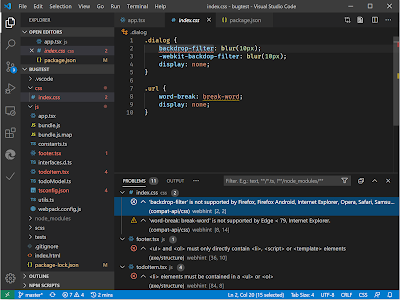

Growing your tools

Related to the previous topic: the industry will change over time, and the tools available to you will change with it. Like any good practicing laborer in a technical field, be it medicine, agriculture, or manufacturing, learning of new tools and improving the ones you already have is a continual exercise. The ride doesn't end until you get off.

Closest I got: Everything and nothing. Improving and seeking to improve one's tools has to become like breathing air to a software engineer; this is the "piano, not physics" part of the practice of programming itself. Better IDEs, better languages, best practices, new frameworks, new ideas... Seek them out, and may they stay exciting to you your entire life.